16 Unsupervised Learning

This chapter covers the eighth learning objective along with one additional topic of correlation analysis.

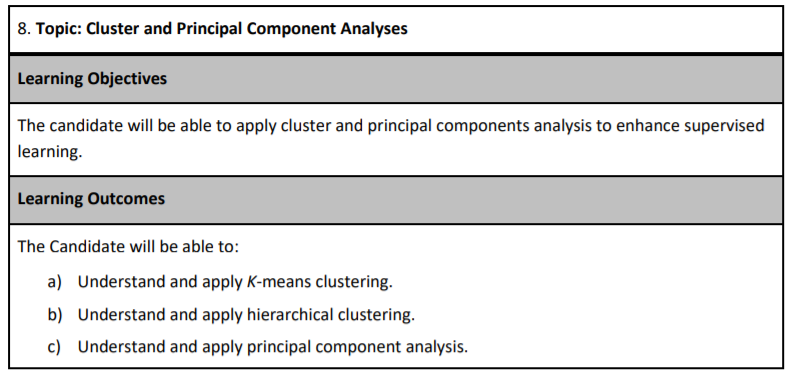

As of the fall of 2020, there have been eight different PA exams. The table below shows the types of questions in each. Your exam in December will likely have a question on either k-means or hierarchical clustering because these topics have not frequently been tested. The Hospital Readmissions sample project did have one question related to k-means, but that was in 2018. Our ExamPA.net practice exams contain questions on both topics.

16.1 Types of Learning

You are in a classroom and your teacher is giving a lesson which has a correct answer, such as 1 + 2 = 2. This is called supervised learning. The teacher is the supervisor, who is responsible for minimizing the number of incorrect answers. When a question is answered correctly, there is a reward, and when a mistake is made, there is a penalty. In machine learning, we measure the performance using metrics such as RMSE, and we say that the model is a good “fit” when this metric is low.

Figure 16.1: Supervised (Left), Unsupervised (Right)

However, if you are given a pile of Legos and told to build whatever you want, that would be unsupervised learning. There are no right or wrong answers and to goal is to explore patterns in the data. There are no performance metrics, and it is impossible to say whether a model is a good fit or not.

Up to this point, you have been working with supervised learning. This is the major focus of PA because predictive analytics often is used to predict a future outcome. We now move on to the unsupervised learning algorithms. Here are all of the learning algorithms on PA:

| Supervised | Unsupervised |

|---|---|

| GLM | Correlation analysis |

| Lasso, Ridge, and Elastic Net | Principal component analysis (PCA) |

| Decision Tree | K-means clustering |

| Bagged Tree | Hierarchical clustering |

| Boosted Tree |

Semi-Supervised Learning is a mix of the two. One example of this is using PCA or Clustering to create features used in a supervised model.

16.2 Correlation Analysis

- Two variables are said to be positively correlated when increasing one tends to increase the other and negatively correlated when increasing one decreases the other

- Correlation is unsupervised because it does not depend on the target variable

- Correlation is only defined for numeric variables. It is possible to calculate the correlation between binary variables if coded as 0 or 1, but these questions have never appeared on PA.

16.2.1 Correlation does not equal causation

This is a common saying of statisticians. Two things happening at the same time are not sufficient evidence to suggest that one causes the other. A spurious correlation is when two unrelated variables have a positive or negative correlation by random chance.

Examples:

Number of Drownings and Ice Cream Sales: Drownings rise when ice cream sales rise because the heat causes more people to go swimming and want ice cream.

Executives who say “please” and “thank you” more often enjoy better performance reviews: It might at first appear that this is due to brown-nosing or flattery, but a more likely explanation is that people who take the extra effort to be polite also take the extra effort to do their jobs well. People who have good performance may also be polite, but not all polite people are high performers.

16.3 Principal Component Analysis (PCA)

Often there are variables that contain redundant information. PCA is one method of simplifying them.

StatQuest. “Principal Component Analysis (PCA), Step-by-Step” YouTube, Joshua Starmer, 2 Apr 2018, https://www.youtube.com/watch?v=FgakZw6K1QQ

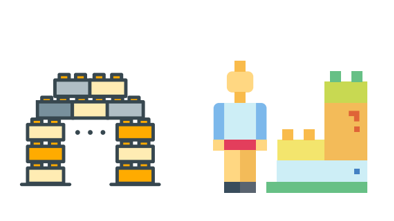

PCA is a dimensionality reduction method which reduces the number of variables needed to retain most of the information in a matrix. If there are predictor variables \((x_1, x_2, x_3)\), then running PCA and choosing the first two Principal Components (PCs) would reduce the dimension from 3 to 2. You can imagine this as observations in three-dimensional space being projected down onto a plane. The coordinates of this plane are \((\text{PC}_1, \text{PC}_2)\).

Each PC is a linear combination of the original variables. For example, PC1 might be

\[PC_1 = 0.2X_1 + 0.3X_2 - 0.2X_3\] The weights here are also called loadings or rotations, and are (0.2, 0.3, -0.2) in this example.

| Readings | |

|---|---|

| ISLR 10.2 Principal Component Analysis | |

| ISLR 10.3 Clustering Methods |

16.3.1 Example: US Arrests

In this example, we perform PCA on the USArrests data set, which is part of

the base R package. The rows of the data set contain the 50 states, in

alphabetical order:

library(tidyverse)

states=row.names(USArrests)

states## [1] "Alabama" "Alaska" "Arizona" "Arkansas" "California"

## [6] "Colorado" "Connecticut" "Delaware" "Florida" "Georgia"

## [11] "Hawaii" "Idaho" "Illinois" "Indiana" "Iowa"

## [16] "Kansas" "Kentucky" "Louisiana" "Maine" "Maryland"

## [21] "Massachusetts" "Michigan" "Minnesota" "Mississippi" "Missouri"

## [26] "Montana" "Nebraska" "Nevada" "New Hampshire" "New Jersey"

## [31] "New Mexico" "New York" "North Carolina" "North Dakota" "Ohio"

## [36] "Oklahoma" "Oregon" "Pennsylvania" "Rhode Island" "South Carolina"

## [41] "South Dakota" "Tennessee" "Texas" "Utah" "Vermont"

## [46] "Virginia" "Washington" "West Virginia" "Wisconsin" "Wyoming"The columns of the data set contain four variables relating to various crimes:

glimpse(USArrests)## Rows: 50

## Columns: 4

## $ Murder <dbl> 13.2, 10.0, 8.1, 8.8, 9.0, 7.9, 3.3, 5.9, 15.4, 17.4, 5.3, 2.6, 10.4, 7.2, 2.2, 6.0, …

## $ Assault <int> 236, 263, 294, 190, 276, 204, 110, 238, 335, 211, 46, 120, 249, 113, 56, 115, 109, 24…

## $ UrbanPop <int> 58, 48, 80, 50, 91, 78, 77, 72, 80, 60, 83, 54, 83, 65, 57, 66, 52, 66, 51, 67, 85, 7…

## $ Rape <dbl> 21.2, 44.5, 31.0, 19.5, 40.6, 38.7, 11.1, 15.8, 31.9, 25.8, 20.2, 14.2, 24.0, 21.0, 1…Let us start by taking a quick look at the column means of the data.

USArrests %>% summarise_all(mean)## Murder Assault UrbanPop Rape

## 1 7.788 170.76 65.54 21.232We see right away the the data have vastly different means. We can also examine the variances of the four variables.

USArrests %>% summarise_all(var)## Murder Assault UrbanPop Rape

## 1 18.97047 6945.166 209.5188 87.72916Not surprisingly, the variables also have vastly different variances: the UrbanPop variable measures the percentage of the population in each state living in an urban area, which is not comparable to the number of crimes committed in each state per 100,000 individuals. If we failed to scale the variables before performing PCA, then most of the principal components we observed would be driven by the Assault variable, since it has by far the largest mean and variance.

Thus, it is important to standardize the variables to have mean zero and standard deviation 1 before performing PCA. We will perform principal components analysis using the prcomp() function, which is one of several functions that perform PCA. By default, this centers the variables to have a mean zero. By using the option scale=TRUE, we scale the variables to have standard

deviation 1:

pca = prcomp(USArrests, scale=TRUE)The output from prcomp() contains a number of useful quantities:

names(pca)## [1] "sdev" "rotation" "center" "scale" "x"The center and scale components correspond to the means and standard deviations of the variables that were used for scaling prior to implementing PCA:

pca$center## Murder Assault UrbanPop Rape

## 7.788 170.760 65.540 21.232pca$scale## Murder Assault UrbanPop Rape

## 4.355510 83.337661 14.474763 9.366385The rotation matrix provides the principal component loadings; each column

of pr.out\$rotation contains the corresponding principal component

loading vector:

pca$rotation## PC1 PC2 PC3 PC4

## Murder -0.5358995 0.4181809 -0.3412327 0.64922780

## Assault -0.5831836 0.1879856 -0.2681484 -0.74340748

## UrbanPop -0.2781909 -0.8728062 -0.3780158 0.13387773

## Rape -0.5434321 -0.1673186 0.8177779 0.08902432We see that there are four distinct principal components. This is to be

expected because there are in general min(n − 1, p) informative principal

components in a data set with \(n\) observations and \(p\) variables.

Using the prcomp() function, we do not need to explicitly multiply the data by the principal component loading vectors in order to obtain the principal component score vectors. Rather the 50 × 4 matrix \(x\) has as its columns the principal component score vectors. That is, the $k^{thcolumn is the $k^{th principal component score vector. We’ll take a look at the first few states:

head(pca$x)## PC1 PC2 PC3 PC4

## Alabama -0.9756604 1.1220012 -0.43980366 0.154696581

## Alaska -1.9305379 1.0624269 2.01950027 -0.434175454

## Arizona -1.7454429 -0.7384595 0.05423025 -0.826264240

## Arkansas 0.1399989 1.1085423 0.11342217 -0.180973554

## California -2.4986128 -1.5274267 0.59254100 -0.338559240

## Colorado -1.4993407 -0.9776297 1.08400162 0.001450164We can plot the first two principal components using the biplot() function:

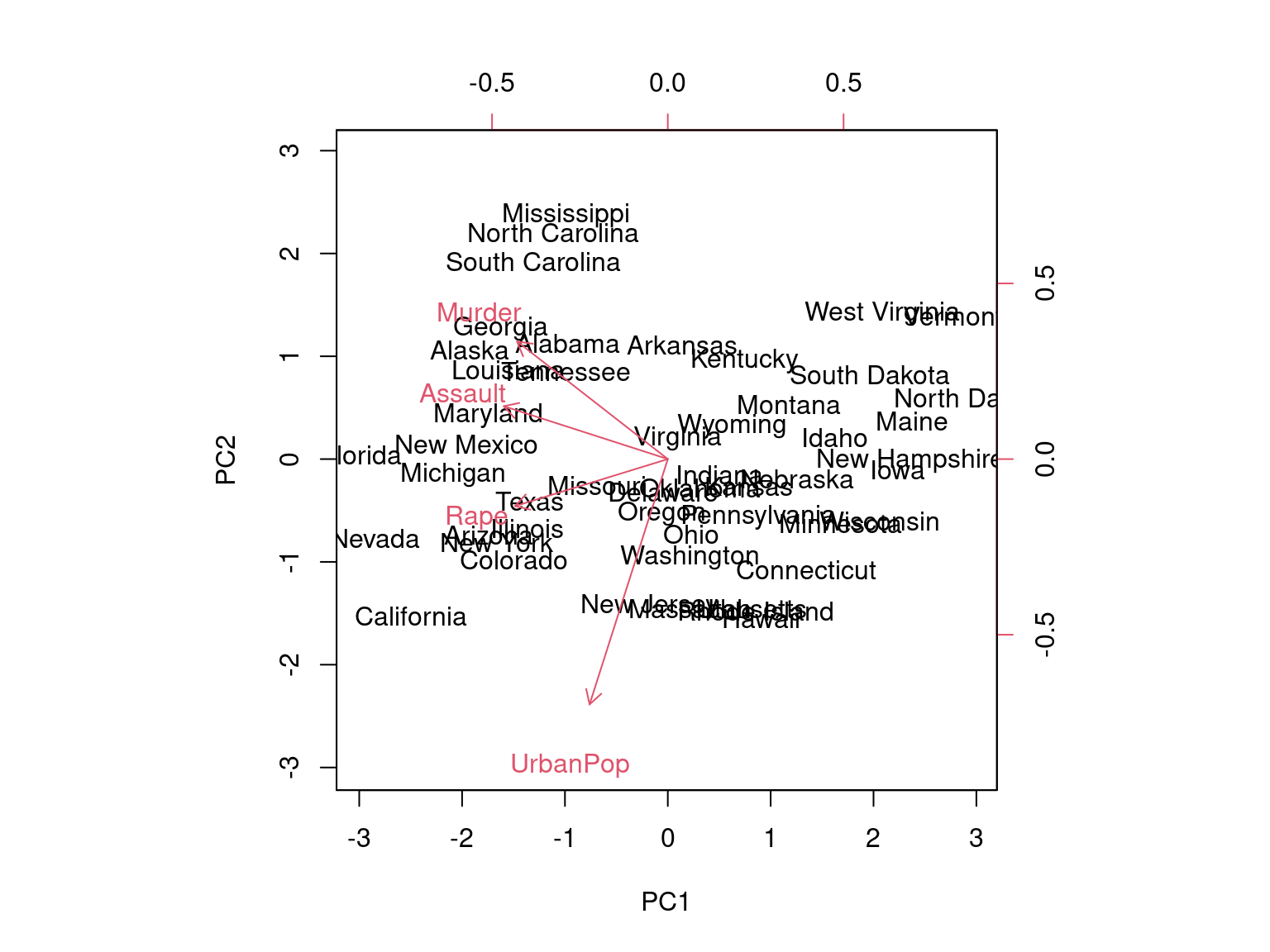

biplot(pca, scale=0)

The scale=0 argument to biplot() ensures that the arrows are scaled to represent the loadings; other values for scale give slightly different bi plots with different interpretations.

The prcomp() function also outputs the standard deviation of each principal component. We can access these standard deviations as follows:

pca$sdev## [1] 1.5748783 0.9948694 0.5971291 0.4164494The variance explained by each principal component is obtained by squaring these:

pca_var=pca$sdev^2

pca_var## [1] 2.4802416 0.9897652 0.3565632 0.1734301To compute the proportion of variance explained by each principal component, we simply divide the variance explained by each principal component by the total variance explained by all four principal components:

pve=pca_var/sum(pca_var)

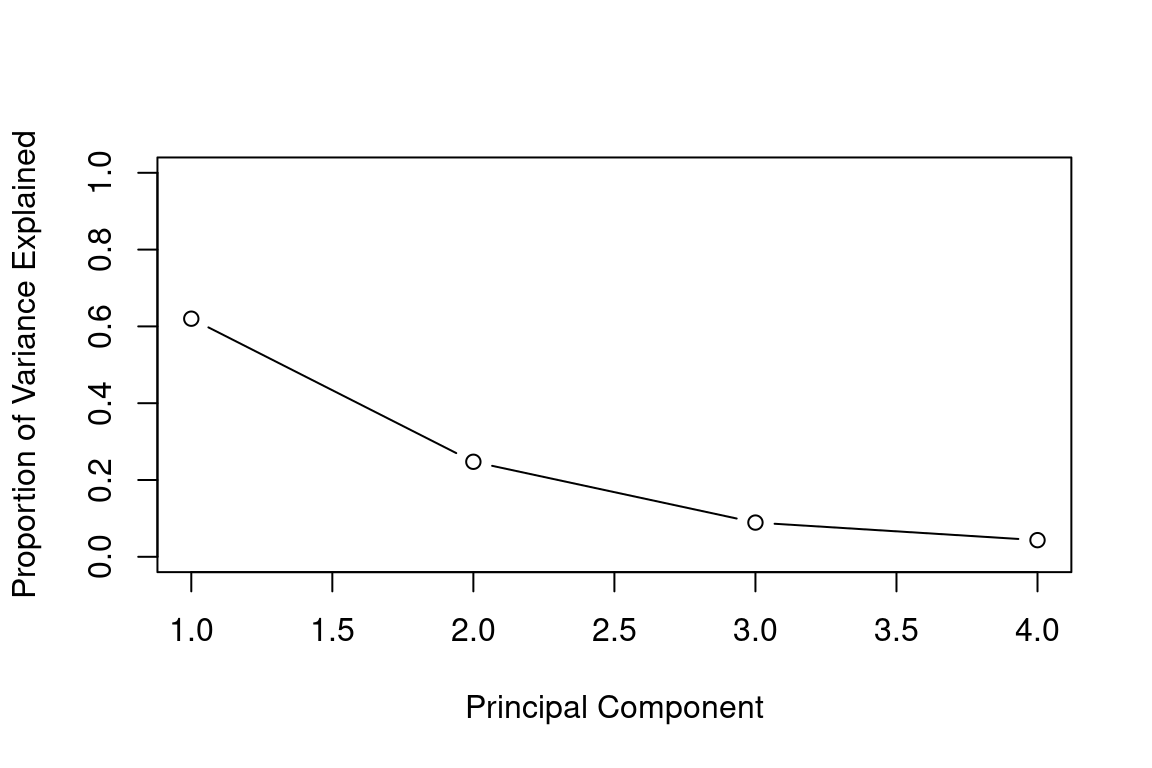

pve## [1] 0.62006039 0.24744129 0.08914080 0.04335752We see that the first principal component explains 62.0% of the variance in the data, the next principal component explains 24.7% of the variance, and so forth. We can plot the PVE explained by each component as follows:

plot(pve, xlab="Principal Component", ylab="Proportion of Variance Explained", ylim=c(0,1),type='b')

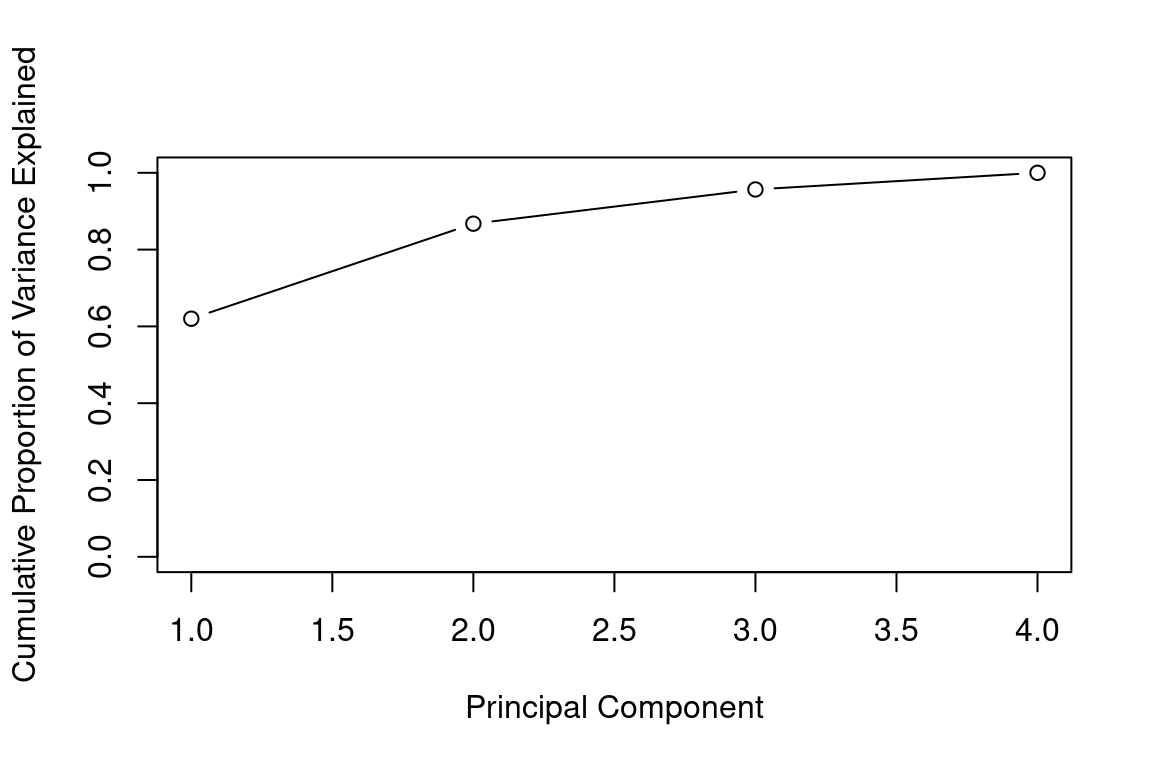

We can also use the function cumsum(), which computes the cumulative sum of the elements of a numeric vector, to plot the cumulative PVE:

plot(cumsum(pve), xlab="Principal Component", ylab="Cumulative Proportion of Variance Explained", ylim=c(0,1),type='b')

a=c(1,2,8,-3)

cumsum(a)## [1] 1 3 11 816.3.2 Example: SOA PA 6/12/19, Task 3

- (9 points) Use observations from principal components analysis (PCA) to generate a new feature

Your assistant has provided code to run a PCA on three variables. Run the code on these three variables. Interpret the output, including the loadings on significant principal components. Generate one new feature based on your observations (which may also involve dropping some current variables). Your assistant has provided some notes on using PCA on factor variables in the .Rmd file.

Already enrolled? Watch the full video: Practice Exams | Practice Exams + Lessons

16.3.3 Example: PCA on Cancel Cells

The data NCI60 contains expression levels of 6,830 genes from 64 cancel cell lines. Cancer type is also recorded.

library(ISLR)

nci_labs=NCI60$labs

nci_data=NCI60$dataWe first perform PCA on the data after scaling the variables (genes) to have a standard deviation one, although one could reasonably argue that it is better not to scale the genes:

pca = prcomp(nci_data, scale=TRUE)We now plot the first few principal component score vectors in order to visualize the data. The observations (cell lines) corresponding to a given cancer type will be plotted in the same color to see to what extent the observations within a cancer type are similar to each other. We first create a simple function that assigns a distinct color to each element of a numeric vector. The function will be used to assign a color to each of the 64 cell lines, based on the cancer type to which it corresponds. We will use the rainbow() function, which takes its argument a positive integer and returns a vector containing that number of distinct colors.

Cols=function(vec){

cols=rainbow(length(unique(vec)))

return(cols[as.numeric(as.factor(vec))])

}We now can plot the principal component score vectors:

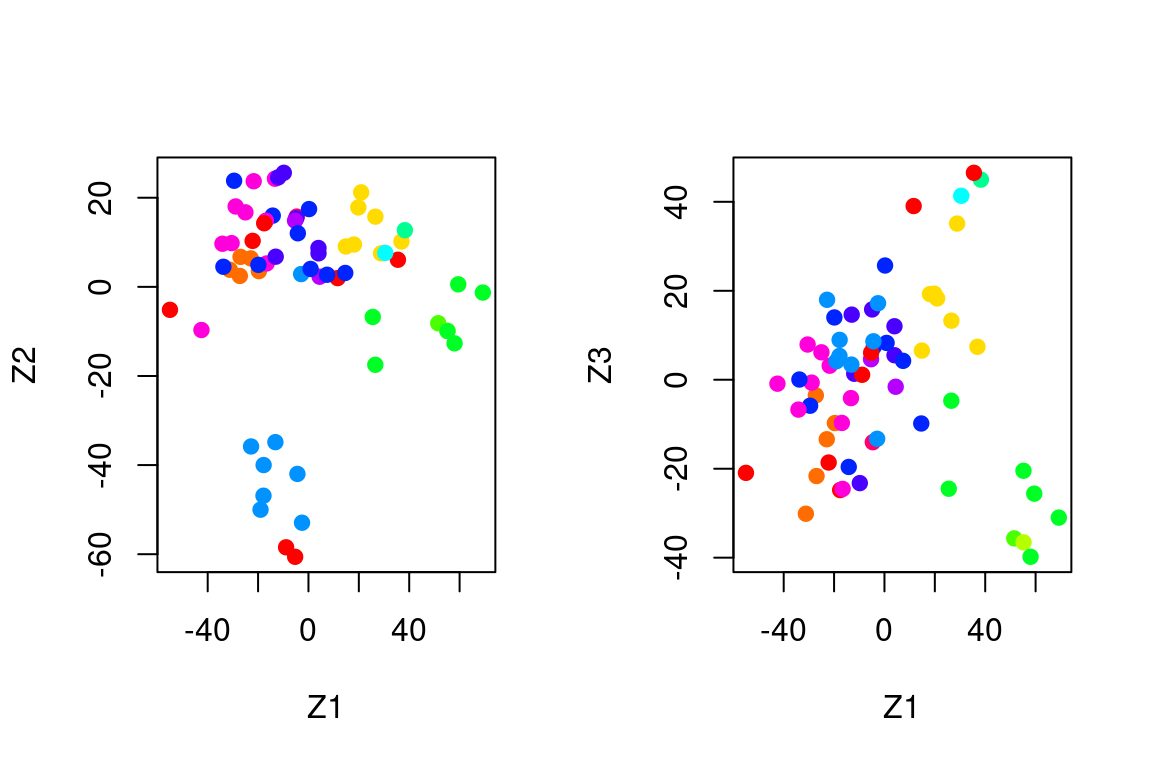

par(mfrow=c(1,2))

plot(pca$x[,1:2], col=Cols(nci_labs), pch=19,xlab="Z1",ylab="Z2")

plot(pca$x[,c(1,3)], col=Cols(nci_labs), pch=19,xlab="Z1",ylab="Z3")

On the whole, cell lines corresponding to a single cancer type tend to have similar values on the first few principal component score vectors.

This indicates that cell lines from the same cancer type tend to have pretty similar gene expression levels.

We can obtain a summary of the proportion of variance explained (PVE) of the first few principal components using the (PVE) of the first few principal components using the summary() method for a prcomp object:

summary(pca)## Importance of components:

## PC1 PC2 PC3 PC4 PC5 PC6 PC7 PC8 PC9

## Standard deviation 27.8535 21.48136 19.82046 17.03256 15.97181 15.72108 14.47145 13.54427 13.14400

## Proportion of Variance 0.1136 0.06756 0.05752 0.04248 0.03735 0.03619 0.03066 0.02686 0.02529

## Cumulative Proportion 0.1136 0.18115 0.23867 0.28115 0.31850 0.35468 0.38534 0.41220 0.43750

## PC10 PC11 PC12 PC13 PC14 PC15 PC16 PC17 PC18

## Standard deviation 12.73860 12.68672 12.15769 11.83019 11.62554 11.43779 11.00051 10.65666 10.48880

## Proportion of Variance 0.02376 0.02357 0.02164 0.02049 0.01979 0.01915 0.01772 0.01663 0.01611

## Cumulative Proportion 0.46126 0.48482 0.50646 0.52695 0.54674 0.56590 0.58361 0.60024 0.61635

## PC19 PC20 PC21 PC22 PC23 PC24 PC25 PC26 PC27 PC28

## Standard deviation 10.43518 10.3219 10.14608 10.0544 9.90265 9.64766 9.50764 9.33253 9.27320 9.0900

## Proportion of Variance 0.01594 0.0156 0.01507 0.0148 0.01436 0.01363 0.01324 0.01275 0.01259 0.0121

## Cumulative Proportion 0.63229 0.6479 0.66296 0.6778 0.69212 0.70575 0.71899 0.73174 0.74433 0.7564

## PC29 PC30 PC31 PC32 PC33 PC34 PC35 PC36 PC37 PC38

## Standard deviation 8.98117 8.75003 8.59962 8.44738 8.37305 8.21579 8.15731 7.97465 7.90446 7.82127

## Proportion of Variance 0.01181 0.01121 0.01083 0.01045 0.01026 0.00988 0.00974 0.00931 0.00915 0.00896

## Cumulative Proportion 0.76824 0.77945 0.79027 0.80072 0.81099 0.82087 0.83061 0.83992 0.84907 0.85803

## PC39 PC40 PC41 PC42 PC43 PC44 PC45 PC46 PC47 PC48

## Standard deviation 7.72156 7.58603 7.45619 7.3444 7.10449 7.0131 6.95839 6.8663 6.80744 6.64763

## Proportion of Variance 0.00873 0.00843 0.00814 0.0079 0.00739 0.0072 0.00709 0.0069 0.00678 0.00647

## Cumulative Proportion 0.86676 0.87518 0.88332 0.8912 0.89861 0.9058 0.91290 0.9198 0.92659 0.93306

## PC49 PC50 PC51 PC52 PC53 PC54 PC55 PC56 PC57 PC58

## Standard deviation 6.61607 6.40793 6.21984 6.20326 6.06706 5.91805 5.91233 5.73539 5.47261 5.2921

## Proportion of Variance 0.00641 0.00601 0.00566 0.00563 0.00539 0.00513 0.00512 0.00482 0.00438 0.0041

## Cumulative Proportion 0.93947 0.94548 0.95114 0.95678 0.96216 0.96729 0.97241 0.97723 0.98161 0.9857

## PC59 PC60 PC61 PC62 PC63 PC64

## Standard deviation 5.02117 4.68398 4.17567 4.08212 4.04124 2.148e-14

## Proportion of Variance 0.00369 0.00321 0.00255 0.00244 0.00239 0.000e+00

## Cumulative Proportion 0.98940 0.99262 0.99517 0.99761 1.00000 1.000e+00Using the plot() function, we can also plot the variance explained by the first few principal components:

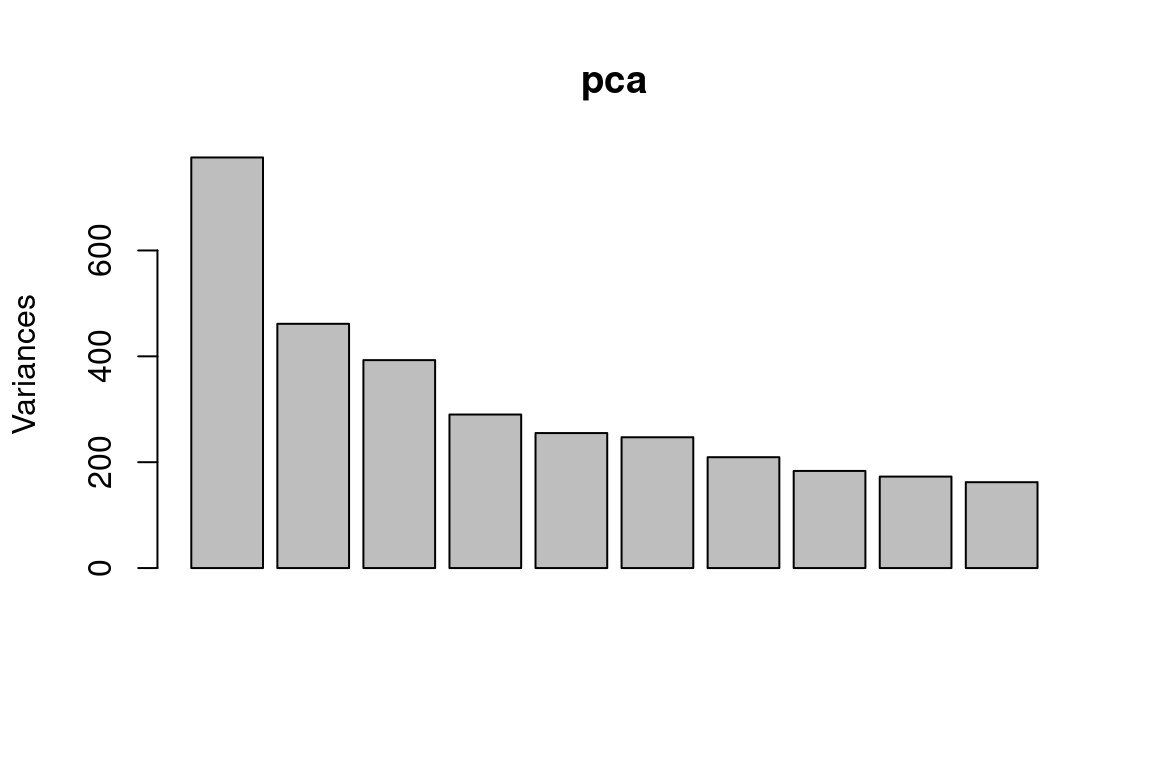

plot(pca)

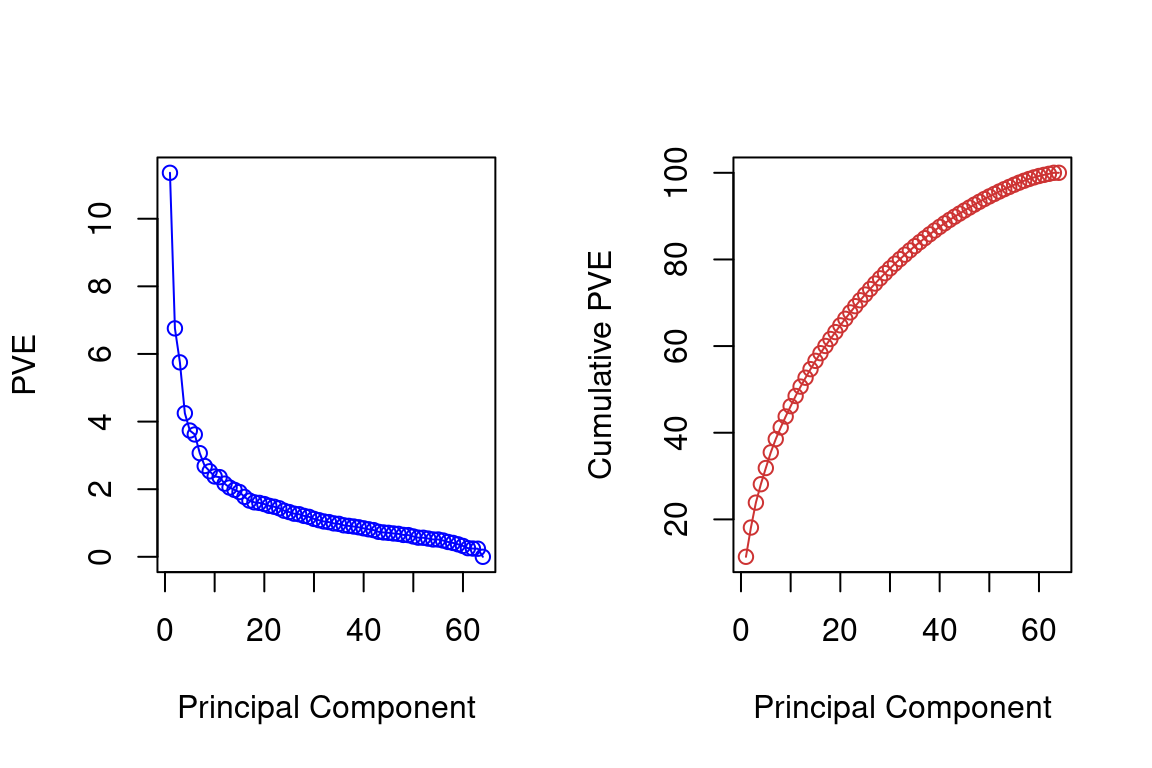

Note that the height of each bar in the bar plot is given by squaring the corresponding element of pr.out\$sdev. However, it is generally more informative to plot the PVE of each principal component (i.e. a scree plot) and the cumulative PVE of each principal component. This can be done with just a little tweaking:

pve = 100*pca$sdev^2/sum(pca$sdev^2)

par(mfrow=c(1,2))

plot(pve, type="o", ylab="PVE", xlab="Principal Component", col="blue")

plot(cumsum(pve), type="o", ylab="Cumulative PVE", xlab="Principal Component", col="brown3")

Together, we see that the first seven principal components explain around 40% of the variance in the data. This is not a huge amount of the variance. However, looking at the scree plot, we see that while each of the first seven principal components explains a substantial amount of variance, there is a marked decrease in the variance explained by other principal components. That is, there is an elbow in the plot after approximately the seventh principal component. This suggests that there may be little benefit to examining more than seven or so principal components (phew! even examining seven principal components may be difficult).

16.4 Clustering

Imagine that you are a large retailer interested in understanding the customer base. There may be several “types” of customers, such as those shopping for a business with corporate accounts, those shopping for leisure, or debt-strapped grad students. Each of these customers would exhibit different behavior and should be treated differently statistically. However, how can the “type” of customer be defined? Especially for large customer data sets in the millions, one can imagine how this problem can be challenging.

Clustering algorithms look for groups of observations that are similar to one another. Because there is no target variable, measuring the quality of the “fit” is much more complicated. There are many clustering algorithms, but this exam only focuses on the two that are most common.

16.5 K-Means Clustering

Kmeans takes continuous data and assigns observations into k clusters or groups. In the two-dimensional example, this is the same as drawing lines around points.

StatQuest. “ K-means clustering” YouTube, Joshua Starmer, 2 Apr 2018, https://www.youtube.com/watch?v=4b5d3muPQmA&t=268s

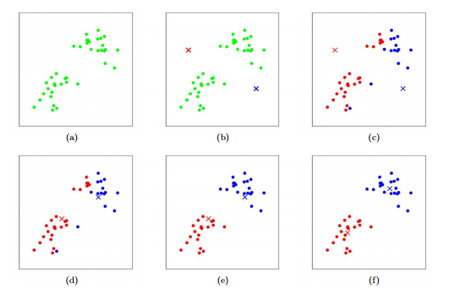

Kmeans consists of the following steps:

a) Start with two variables (\(X_1\) on the X-axis, and \(X_2\) on the Y-axis.)

b) Randomly assign cluster centers.

c) Put each observation into the cluster that is closest.

d) - f) Move the cluster center to the mean of the observations assigned to it and continue until the centers stop moving.

g) Repeated steps a) - f) a given number of times (controlled by n.starts). This reduces the uncertainty from choosing the initial centers randomly.

16.5.0.1 R Example

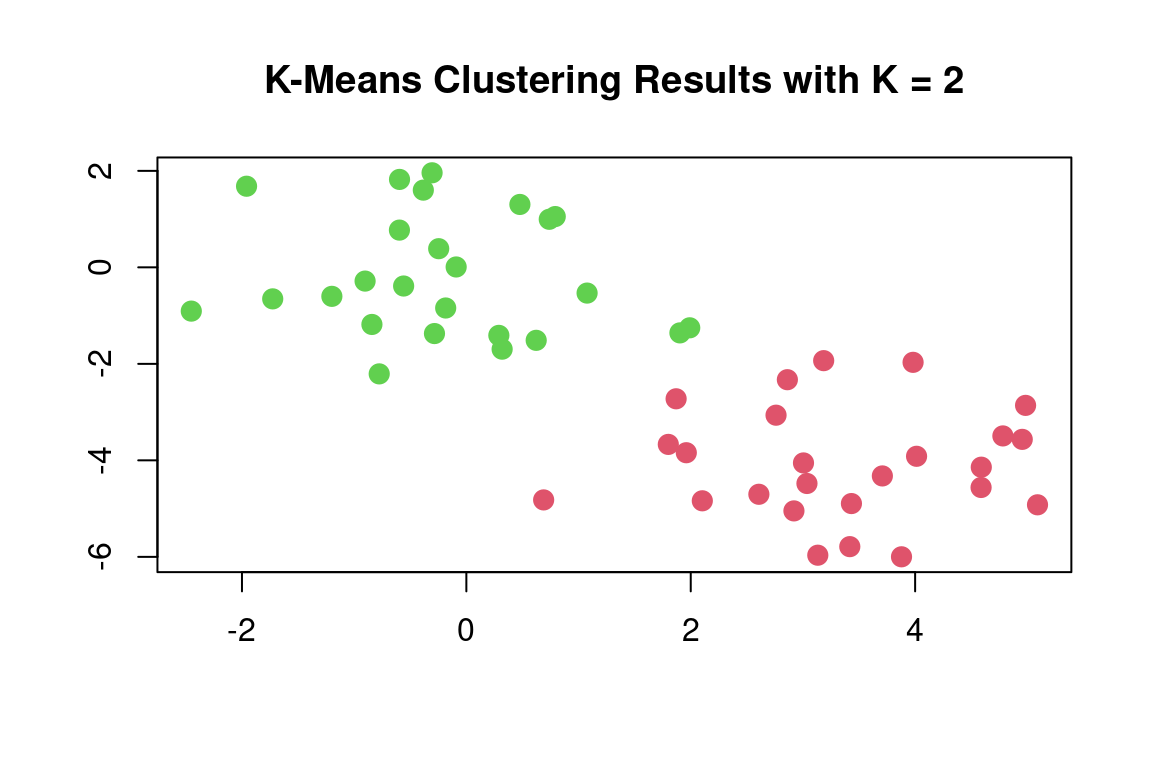

In R, the function kmeans() performs K-means clustering in R. We begin with a simple simulated example in which there truly are two clusters in the data: the first 25 observations have a mean shift relative to the next 25 observations.

set.seed(2)

x = matrix(rnorm(50*2), ncol = 2)

x[1:25,1] = x[1:25,1]+3

x[1:25,2] = x[1:25,2]-4We now perform K-means clustering with K = 2:

km_out = kmeans(x,2,nstart = 20)The cluster assignments of the 50 observations are contained in

km_out$cluster:

km_out$cluster## [1] 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2The K-means clustering perfectly separated the observations into two clusters even though we did not supply any group information to kmeans(). We can plot the data, with each observation colored according to its cluster assignment:

plot(x, col = (km_out$cluster+1), main = "K-Means Clustering Results with K = 2", xlab = "", ylab = "", pch = 20, cex = 2)

Here the observations can be easily plotted because they are two-dimensional. If there were more than two variables, we could instead perform PCA and plot the first two principal components score vectors.

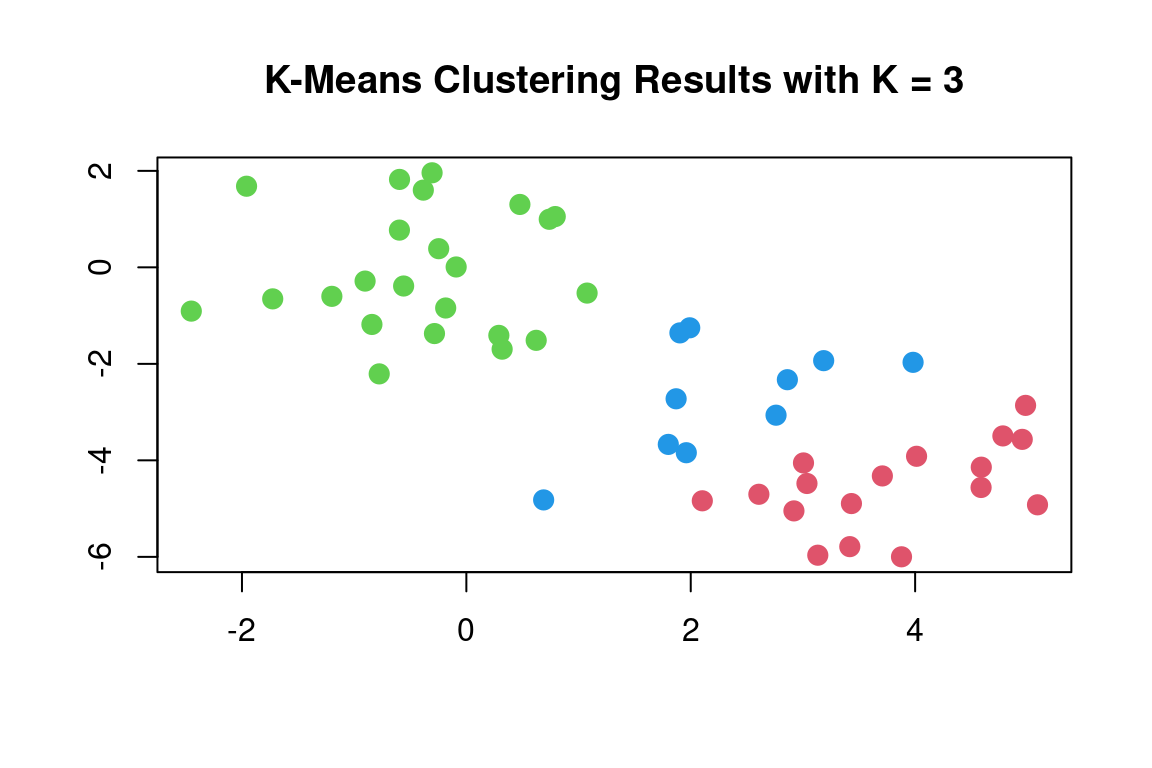

In this example, we knew that there were two clusters because we generated the data. However, for real data, in general, we do not know the true number of clusters. We could instead have performed K-means clustering on this example with K = 3. If we do this, K-means clustering will split up the two “real” clusters since it has no information about them:

set.seed(4)

km_out = kmeans(x, 3, nstart = 20)

km_out## K-means clustering with 3 clusters of sizes 17, 23, 10

##

## Cluster means:

## [,1] [,2]

## 1 3.7789567 -4.56200798

## 2 -0.3820397 -0.08740753

## 3 2.3001545 -2.69622023

##

## Clustering vector:

## [1] 1 3 1 3 1 1 1 3 1 3 1 3 1 3 1 3 1 1 1 1 1 3 1 1 1 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 3 2 3 2 2 2 2

##

## Within cluster sum of squares by cluster:

## [1] 25.74089 52.67700 19.56137

## (between_SS / total_SS = 79.3 %)

##

## Available components:

##

## [1] "cluster" "centers" "totss" "withinss" "tot.withinss" "betweenss"

## [7] "size" "iter" "ifault"plot(x, col = (km_out$cluster+1), main = "K-Means Clustering Results with K = 3", xlab = "", ylab = "", pch = 20, cex = 2)

To run the kmeans() function in R with multiple initial cluster assignments, we use the nstart argument. If a value of nstart greater than one is used, then K-means clustering will be performed using multiple random assignments, and the kmeans() function will report only the best results. Here we compare using nstart = 1:

set.seed(3)

km_out = kmeans(x, 3, nstart = 1)

km_out$tot.withinss## [1] 97.97927to nstart = 20:

km_out = kmeans(x,3,nstart = 20)

km_out$tot.withinss## [1] 97.97927Note that km_out\$tot.withinss is the total within-cluster sum of squares, which we seek to minimize by performing K-means clustering. The individual within-cluster sum-of-squares are contained in the vector km_out\$withinss.

It is generally recommended to always run K-means clustering with a large value of nstart, such as 20 or 50, to avoid getting stuck in an undesirable local optimum.

When performing K-means clustering and using multiple initial cluster assignments, it is also important to set a random seed using the

set.seed() function. This way, the initial cluster assignments can be replicated, and the K-means output will be fully reproducible.

16.6 Hierarchical Clustering

Kmeans required that we choose the number of clusters, k. Hierarchical clustering is an alternative that does not require that we choose only one value of k.

StatQuest. “ Hierarchical Clustering” YouTube, Joshua Starmer, 20 Jun, 2017, https://www.youtube.com/watch?v=7xHsRkOdVwo&t=137s

The most common type of hierarchical clustering uses a bottom-up approach. This starts with a single observation and then looks for others close and puts them into a cluster. Then it looks for other clusters that are similar and groups these together into a mega cluster. It continues to do this until all observations are in the same group.

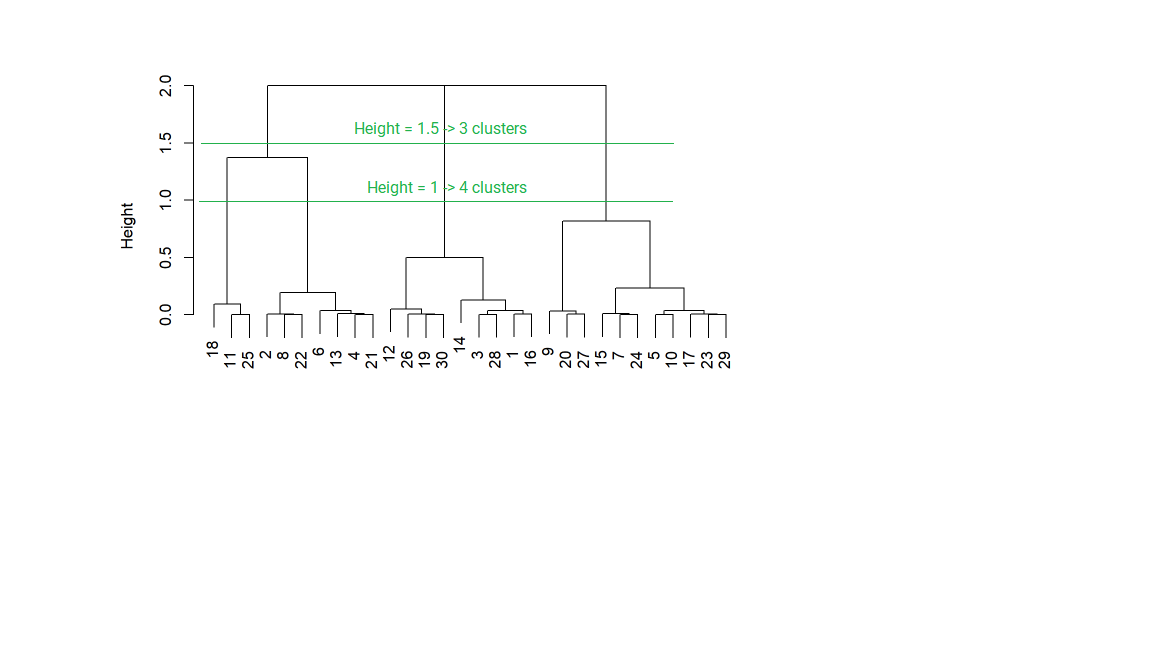

This is analyzed with a graph called a dendrogram (dendro = tree, gram = graph). The height represents “distance,” or how similar the clusters are to one another. The clusters on the bottom, which are vertically close to one another, have similar data values; the clusters that are further apart vertically are less similar.

Choosing the value of the cutoff height changes the number of clusters that result.

Certain data have a natural hierarchical structure. For example, say that the variables are City, Town, State, Country, and Continent. If we used hierarchical clustering, this pattern could be established even if we did not have labels for Cities, Towns, and so forth.

The hclust() function implements hierarchical clustering in R. In the following example; we use the data of previous section to plot the hierarchical clustering dendrogram using full, single, and average linkage clustering Euclidean distance as the dissimilarity measure. We begin by clustering observations using complete linkage. The dist() function is used

to compute the 50 \(\times\) 50 inter-observation Euclidean distance matrix:

hc_complete = hclust(dist(x), method = "complete")We could just as easily perform hierarchical clustering with average or single linkage instead:

hc_average = hclust(dist(x), method = "average")

hc_single = hclust(dist(x), method = "single")We can now plot the dendrograms obtained using the usual plot() function.

The numbers at the bottom of the plot identify each observation:

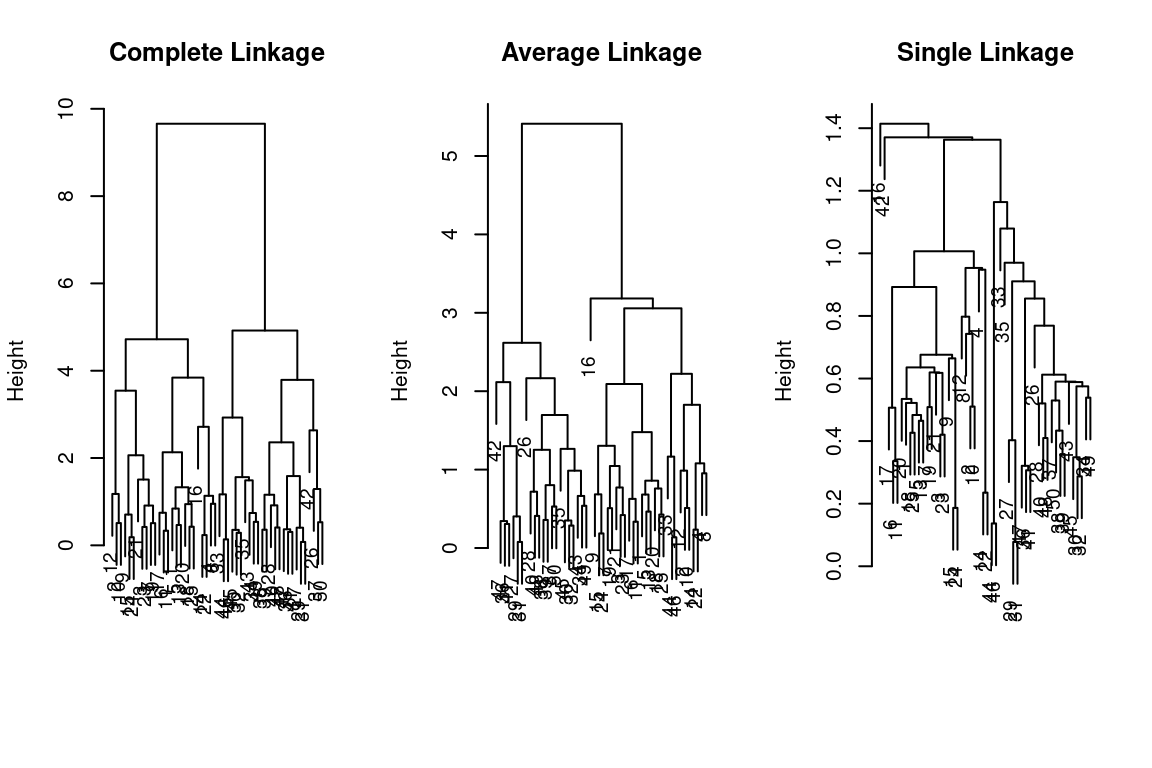

par(mfrow = c(1,3))

plot(hc_complete,main = "Complete Linkage", xlab = "", sub = "", cex = .9)

plot(hc_average, main = "Average Linkage", xlab = "", sub = "", cex = .9)

plot(hc_single, main = "Single Linkage", xlab = "", sub = "", cex = .9)

To determine the cluster labels for each observation associated with a given cut of the dendrogram, we can use the cutree() function:

cutree(hc_complete, 2)## [1] 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2 2cutree(hc_average, 2)## [1] 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 2 2 2 2 2 2 1 2 2 2 2 2 2 2 2 2 2 1 2 1 2 2 2 2cutree(hc_single, 2)## [1] 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1For this data, complete and average linkage generally separate the observations into their correct groups. However, single linkage identifies one observation as belonging to its own cluster. A more sensible answer is obtained when four clusters are selected, although there are still two singletons:

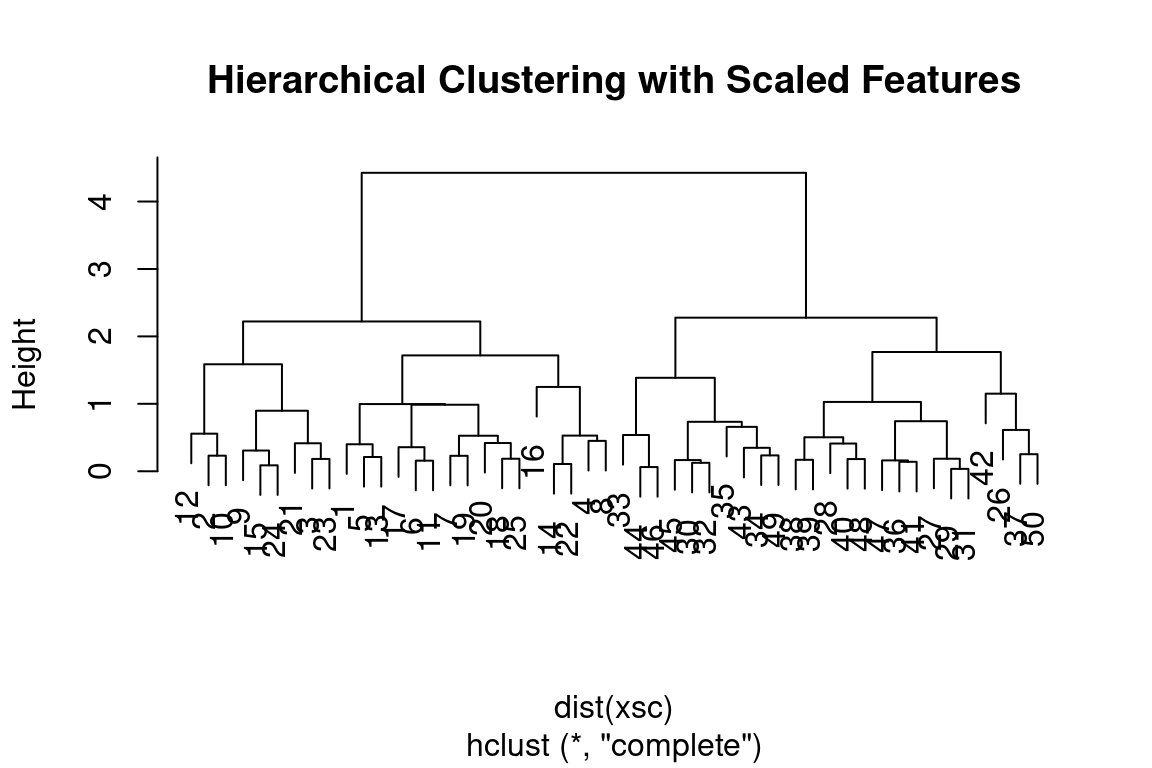

cutree(hc_single, 4)## [1] 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 2 1 1 1 1 1 1 1 1 1 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 3 4 3 3 3 3 3 3 3 3To scale the variables before performing hierarchical clustering of the

observations, we can use the scale() function:

xsc = scale(x)

plot(hclust(dist(xsc), method = "complete"), main = "Hierarchical Clustering with Scaled Features")

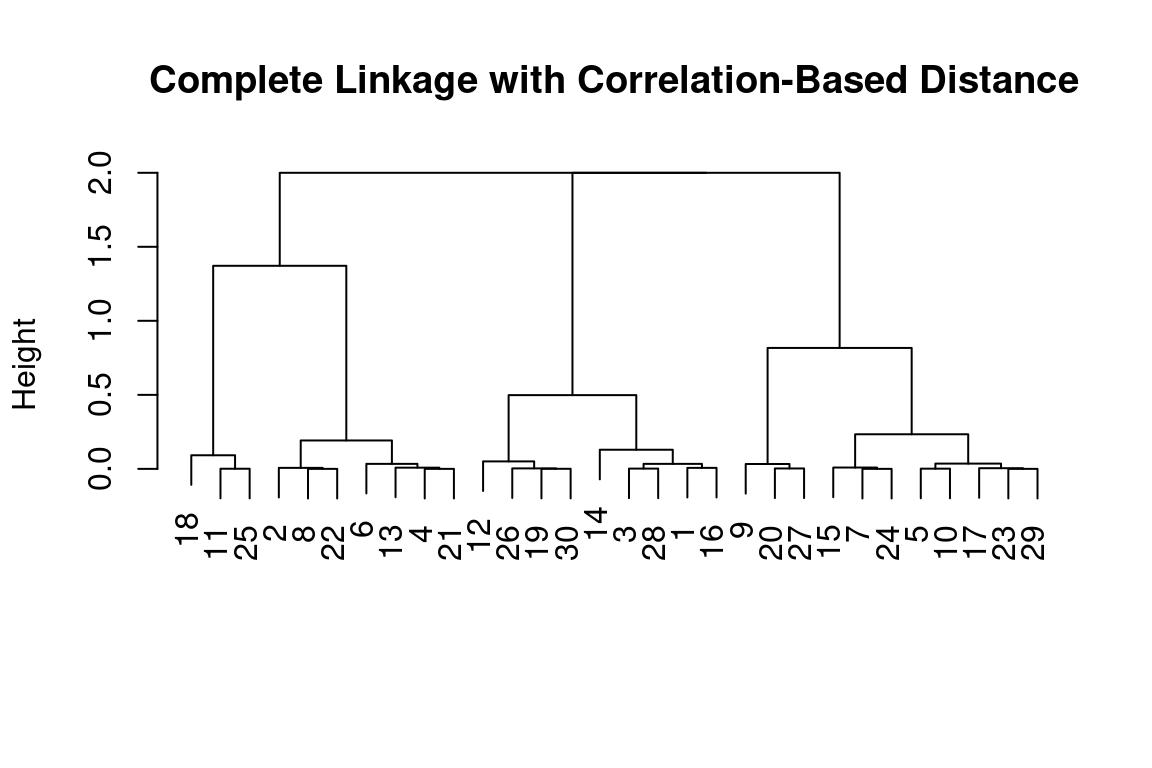

Correlation-based distance can be computed using the as.dist() function, which converts an arbitrary square symmetric matrix into a form that the hclust() function recognizes as a distance matrix. However, this only makes sense for data with at least three features since the absolute correlation between any two observations with measurements on two features is always 1. Let us generate and cluster a three-dimensional data set:

x = matrix(rnorm(30*3), ncol = 3)

dd = as.dist(1-cor(t(x)))

plot(hclust(dd, method = "complete"), main = "Complete Linkage with Correlation-Based Distance", xlab = "", sub = "")

16.6.1 Example: Clustering Cancel Cells

Unsupervised techniques are often used in the analysis of genomic data. In this example, we will see how hierarchical and K-means clustering compare on the NCI60 cancer cell line microarray data, which consists of 6,830 gene expression measurements on 64 cancer cell lines:

# The NCI60 data

library(ISLR)

nci_labels = NCI60$labs

nci_data = NCI60$dataEach cell line is labeled with a cancer type. We will ignore the cancer types in performing clustering, as these are unsupervised techniques. After performing clustering, we will use this column to see the extent to which these cancer types agree with the results of these unsupervised techniques.

The data has 64 rows and 6,830 columns.

dim(nci_data)## [1] 64 6830Let’s take a look at the cancer types for the cell lines:

table(nci_labels)## nci_labels

## BREAST CNS COLON K562A-repro K562B-repro LEUKEMIA MCF7A-repro MCF7D-repro

## 7 5 7 1 1 6 1 1

## MELANOMA NSCLC OVARIAN PROSTATE RENAL UNKNOWN

## 8 9 6 2 9 1We now proceed to hierarchically cluster the cell lines in the NCI60 data to determine whether or not the observations cluster into distinct types of cancer. To begin, we standardize the variables to have mean zero and standard deviation one. This step is optional and needs only be performed if we want each gene to be on the same scale:

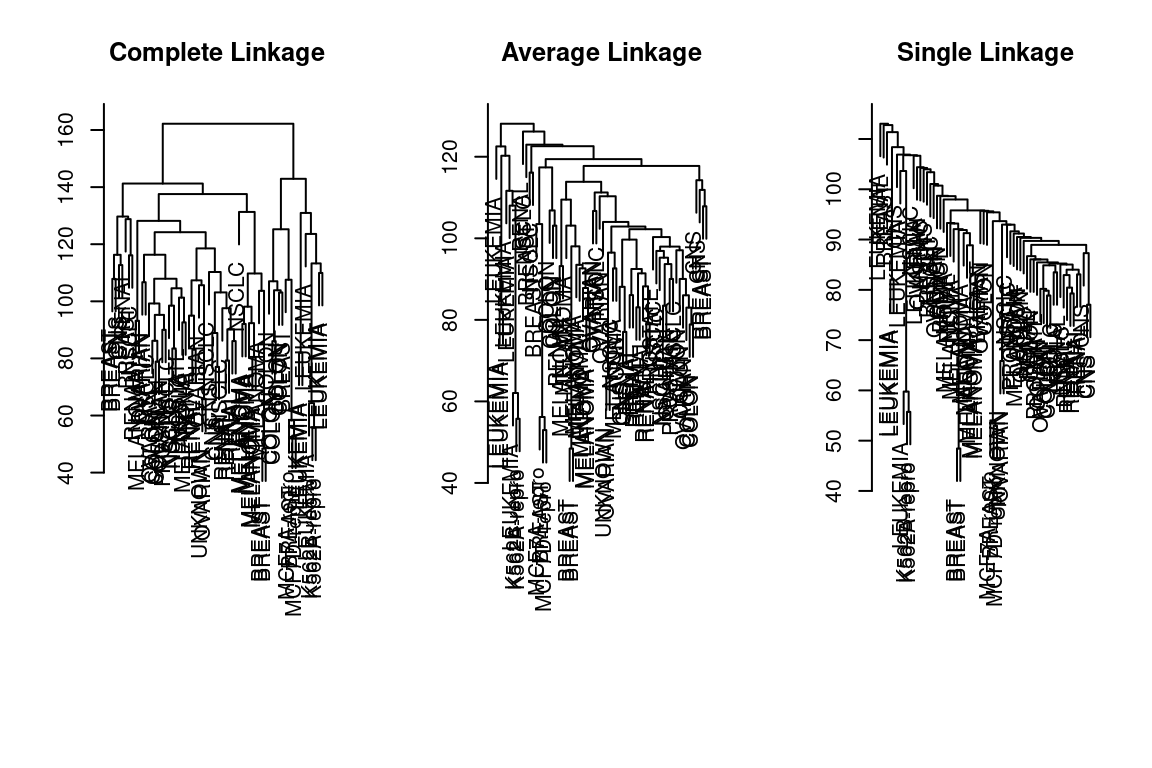

sd_data = scale(nci_data)We now perform hierarchical clustering of the observations using complete, single, and average linkage. We will use standard Euclidean distance as the dissimilarity measure:

par(mfrow = c(1,3))

data_dist = dist(sd_data)

plot(hclust(data_dist), labels = nci_labels, main = "Complete Linkage", xlab = "", sub = "",ylab = "")

plot(hclust(data_dist, method = "average"), labels = nci_labels, main = "Average Linkage", xlab = "", sub = "",ylab = "")

plot(hclust(data_dist, method = "single"), labels = nci_labels, main = "Single Linkage", xlab = "", sub = "",ylab = "")

We see that the choice of linkage certainly does affect the results obtained. Typically, a single linkage will tend to yield trailing clusters: very large clusters onto which individual observations attach one-by-one. On the other hand, complete and average linkage tends to yield more balanced, attractive clusters. For this reason, the complete and average linkage is generally preferred to single linkage. Cell lines within a single cancer type tend to cluster together, although the clustering is not perfect.

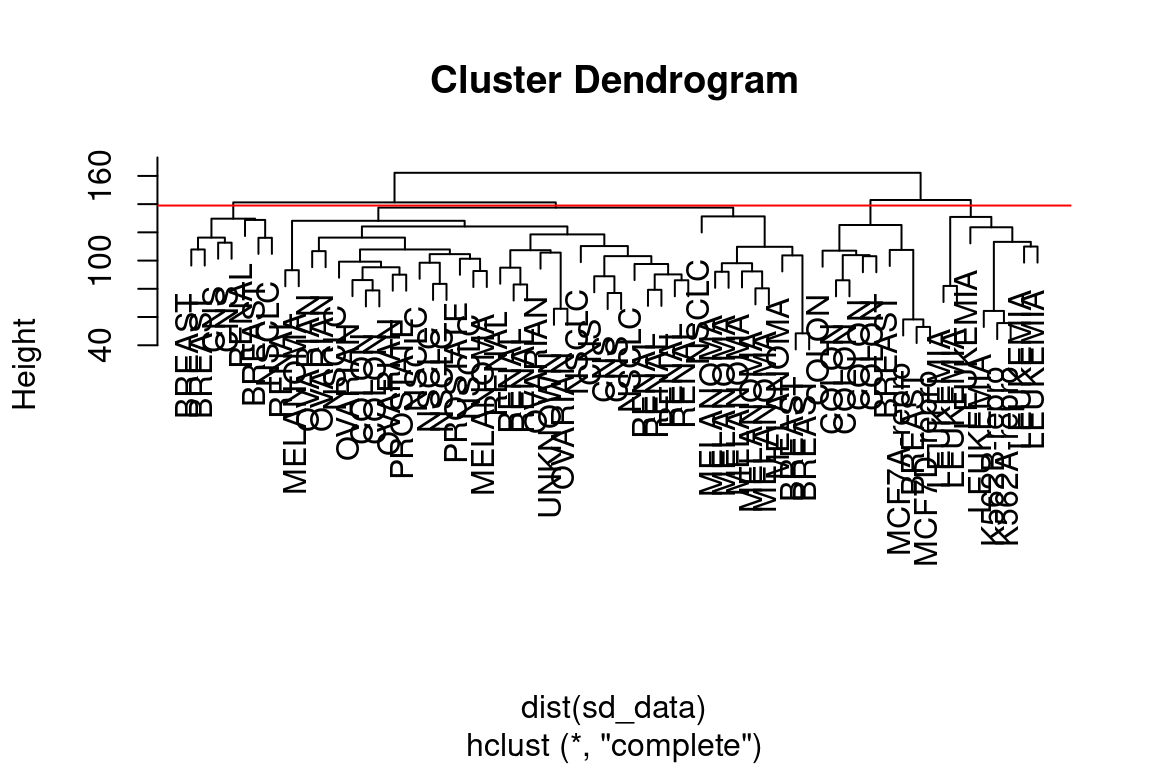

Let us use our complete linkage hierarchical clustering for the analysis. We can cut the dendrogram at the height that will yield a particular number of clusters, say 4:

hc_out = hclust(dist(sd_data))

hc_clusters = cutree(hc_out,4)

table(hc_clusters,nci_labels)## nci_labels

## hc_clusters BREAST CNS COLON K562A-repro K562B-repro LEUKEMIA MCF7A-repro MCF7D-repro MELANOMA NSCLC

## 1 2 3 2 0 0 0 0 0 8 8

## 2 3 2 0 0 0 0 0 0 0 1

## 3 0 0 0 1 1 6 0 0 0 0

## 4 2 0 5 0 0 0 1 1 0 0

## nci_labels

## hc_clusters OVARIAN PROSTATE RENAL UNKNOWN

## 1 6 2 8 1

## 2 0 0 1 0

## 3 0 0 0 0

## 4 0 0 0 0There are some clear patterns. All the leukemia cell lines fall in cluster 3, while the breast cancer cell lines are spread out over three different clusters. We can plot the cut on the dendrogram that produces these four clusters using the abline() function, which draws a straight line on top of any existing plot in R:

par(mfrow = c(1,1))

plot(hc_out, labels = nci_labels)

abline(h = 139, col = "red")

Printing the output of hclust gives a useful brief summary of the object:

hc_out##

## Call:

## hclust(d = dist(sd_data))

##

## Cluster method : complete

## Distance : euclidean

## Number of objects: 64We claimed earlier that K-means clustering and hierarchical clustering with the dendrogram cut to obtain the same number of clusters could yield very different results. How do these NCI60 hierarchical clustering results compare to what we get if we perform K-means clustering

with K = 4?

set.seed(2)

km_out = kmeans(sd_data, 4, nstart = 20)

km_clusters = km_out$clusterWe can use a confusion matrix to compare the differences in how the two methods assigned observations to clusters:

table(km_clusters,hc_clusters)## hc_clusters

## km_clusters 1 2 3 4

## 1 11 0 0 9

## 2 20 7 0 0

## 3 9 0 0 0

## 4 0 0 8 0We see that the four clusters obtained using hierarchical clustering and Kmeans clustering are somewhat different. Cluster 2 in K-means clustering is identical to cluster 3 in hierarchical clustering. However, the other clusters differ: for instance, cluster 4 in K-means clustering contains a portion of the observations assigned to cluster 1 by hierarchical clustering and all of the observations assigned to cluster 2 by hierarchical clustering.

16.6.2 References

These examples are an adaptation of p. 404-407, 410-413 of “Introduction to Statistical Learning with Applications in R” by Gareth James, Daniela Witten, Trevor Hastie, and Robert Tibshirani. Adapted by R. Jordan Crouser at Smith College for SDS293: Machine Learning (Spring 2016), and re-implemented in Fall 2016 tidyverse format by Amelia McNamara and R. Jordan Crouser at Smith College.

Used with permission from Jordan Crouser at Smith College and to the following contributors on GitHub:

- github.com/jcrouser

- github.com/AmeliaMN

- github.com/mhusseinmidd

- github.com/rudeboybert

- github.com/ijlyttle